Artificial Intelligence continues to accelerate across every industry—but one challenge remains consistently underestimated: AI hallucinations.

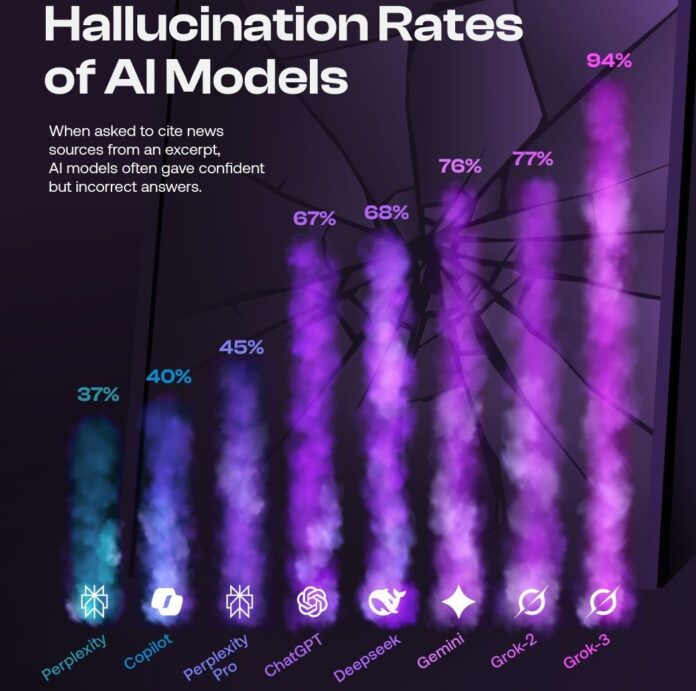

A recent analysis from the Columbia Journalism Review (March 2025) highlights something every professional should know:

➡️ AI models often produce confident answers that are completely incorrect.

In the study, AI tools were given excerpts from news articles and asked to identify the source. Instead of acknowledging uncertainty, many supplied fabricated references, made-up articles, or incorrect citations.

And the numbers speak for themselves.

🔥 Hallucination Rates Across Popular AI Models (2025)

The study shows significant variation across models:

- Perplexity: 37%
- Copilot: 40%
- Perplexity Pro: 45%
- ChatGPT: 67%
- DeepSeek: 68%
- Gemini: 76%
- Grok-2: 77%
- Grok-3: 94%

Even the best result on this list is an error in more than one answer out of three, so no system here is reliable yet for fact-based or source-dependent tasks.

🤖 Why Do AI Models Hallucinate?

Even in 2025, AI models operate on probabilistic predictions, not verified truth.

They:

- predict the most likely sequence of words,
- do not always have access to real-time validated sources,
- may create information when the prompt demands a specific answer.
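To make the first point concrete, here is a minimal Python sketch of next-word prediction. It is a toy illustration, not how any particular product works: the prompt, the candidate continuations, and the probabilities in `NEXT_TOKEN_PROBS` are all made-up assumptions. The point is that the selection step only looks at likelihood, so a plausible publisher name wins over an honest "I am not sure" even when nothing has been checked.

```python
import random

# Hypothetical next-token probabilities for the prompt
# "This excerpt was published by ...". All values are invented for illustration.
NEXT_TOKEN_PROBS = {
    "Reuters": 0.40,
    "The Guardian": 0.35,
    "BBC News": 0.20,
    "I am not sure": 0.05,
}


def greedy_next_token(probs):
    """Return the single most likely continuation (no truth check involved)."""
    return max(probs, key=probs.get)


def sample_next_token(probs, rng):
    """Draw one continuation in proportion to its probability."""
    tokens = list(probs)
    weights = [probs[token] for token in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so repeated runs give the same draws
    print("greedy pick:", greedy_next_token(NEXT_TOKEN_PROBS))
    print("sampled picks:", [sample_next_token(NEXT_TOKEN_PROBS, rng) for _ in range(5)])
```

In this toy setup the greedy pick is always the highest-probability name, and "I am not sure" is chosen only rarely, which mirrors the confident-but-wrong behavior described above.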

This becomes especially problematic in fields like journalism, telecom, healthcare, law, and data analytics, where accuracy is not optional.

⚠️ What Are the Risks for Organizations?

For businesses integrating AI into daily workflows, hallucinations can lead to:

❌ Incorrect insights or recommendations

❌ Misleading analytics

❌ Wrong technical assumptions

❌ Compliance or safety issues

❌ Loss of trust from customers and employees

In critical environments such as telecom networks, cybersecurity, predictive analytics, or infrastructure planning, these errors can have significant operational impact.

✔️ The Right Approach: AI as a Copilot, Not Autopilot

AI is an incredible productivity multiplier.

But it must be used with the right mindset:

- Verify references and sources
- Implement human-in-the-loop validation
- Use AI to speed up analysis, not to replace expertise
- Train teams to understand AI limitations, not just its strengths
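The first two practices can be combined in a simple triage step. The Python sketch below is one possible shape for it, under stated assumptions: the `Citation` type, the `triage` function, and the `verified_urls` set (a list of links your team has already confirmed) are hypothetical names, not part of any real tool. Anything the AI cites that is not already confirmed goes to a person instead of being accepted automatically.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Citation:
    """A reference returned by an AI tool (hypothetical structure)."""
    title: str
    url: str


def triage(citations, verified_urls):
    """Split AI-supplied citations into accepted and needs-human-review.

    A citation is accepted only if its exact URL is in verified_urls,
    a set of links the team has already confirmed. Everything else is
    routed to a human reviewer rather than trusted by default.
    """
    accepted, needs_review = [], []
    for citation in citations:
        if citation.url in verified_urls:
            accepted.append(citation)
        else:
            needs_review.append(citation)
    return accepted, needs_review


if __name__ == "__main__":
    # Placeholder data for illustration only.
    verified = {"https://example.com/articles/real-report"}
    ai_output = [
        Citation("Real report", "https://example.com/articles/real-report"),
        Citation("Unconfirmed piece", "https://example.com/articles/unknown"),
    ]
    ok, review = triage(ai_output, verified)
    print("accepted:", [c.title for c in ok])
    print("send to reviewer:", [c.title for c in review])
```

The design choice is deliberately conservative: the default outcome is human review, and automation is limited to what has already been checked.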

This is why companies around the world are now investing in AI literacy for their technical teams.

Don’t miss our AI in Practice and 5G Data Analytics training to learn more about this technology.

Benefit from a massive discount on our 5G training with 5WorldPro.com.

Start your 5G journey and obtain your 5G certification.

Contact us: contact@5GWorldPro.com